Understanding HTTP Protocol: A Beginner’s Guide

Hypertext Transfer Protocol (HTTP) is the foundation of data communication on the World Wide Web. From early versions such as HTTP/1.0 to advanced iterations like HTTP/3, it has evolved to meet the demands of modern web applications. Let’s dive into the basics, key concepts, and the progression of HTTP.

HTTP/1.0

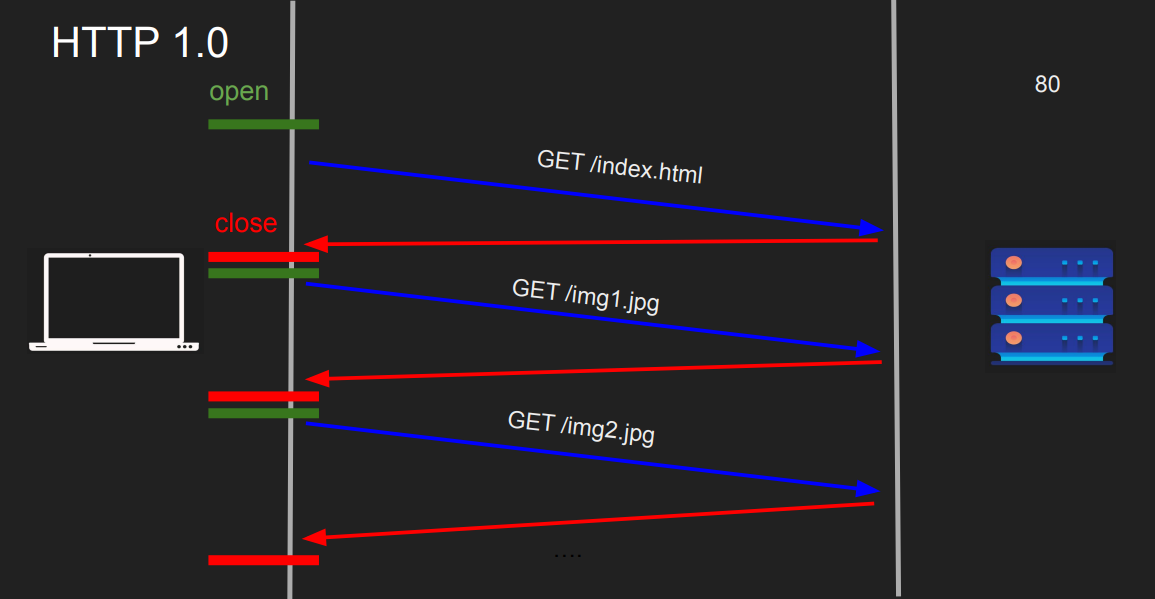

HTTP/1.0 runs on top of TCP. It is a stateless protocol, meaning each request-response cycle is independent.

The server does not retain any information about previous interactions with the client.

Each HTTP/1.0 request opens a new TCP connection. Once the server sends the response, the connection is closed.

When you load a webpage, the server treats each request for HTML, CSS, JavaScript, and images as a separate interaction, with no memory of earlier requests. While this simplifies the protocol, it can lead to inefficiencies, especially for applications requiring state persistence (e.g., user sessions).

The HTTP/1.0 protocol is straightforward to implement; however, its simplicity comes with a drawback: high overhead caused by frequent connection setup and teardown, which results in increased latency.

Request Format of HTTP/1.0

A typical HTTP/1.0 request includes:

- Method: The action to be performed, such as:
  - GET: Retrieve a resource.
  - POST: Send data to the server.
  - HEAD: Retrieve only headers.

- Path: The resource's location (e.g., /index.html).

- HTTP Version: Indicates the protocol version (e.g., HTTP/1.0).

- Headers: Optional metadata providing additional information (e.g., Content-Type, User-Agent).
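To make the format concrete, here is a minimal Python sketch that assembles these parts into the bytes a client would write to a TCP socket. The helper `build_request` is a hypothetical name used only for illustration, and the sketch assumes a request with no body and ASCII-only header values.

```python
def build_request(method: str, path: str, headers: dict, version: str = "HTTP/1.0") -> bytes:
    """Assemble a request line and headers into raw HTTP bytes (no body).

    build_request is a hypothetical helper for illustration.
    """
    # Request line: method, path, and protocol version separated by spaces.
    lines = [f"{method} {path} {version}"]
    # Each header goes on its own line as "Name: value".
    lines.extend(f"{name}: {value}" for name, value in headers.items())
    # Lines end with CRLF; an extra CRLF (an empty line) ends the header section.
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


request = build_request("GET", "/about.html", {"Host": "example.com", "User-Agent": "Mozilla/5.0"})
print(request)
```

Every line ends with CRLF (`\r\n`), and the trailing empty line tells the server that the headers are complete.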

```http
GET /about.html HTTP/1.0
Host: example.com
User-Agent: Mozilla/5.0
```

Response Format of HTTP/1.0

The server responds with:

- Status Line:
  - Protocol version (e.g., HTTP/1.0).
  - Status code and reason phrase (e.g., 200 OK, 404 Not Found).

- Headers: Metadata about the response, such as Content-Type or Content-Length.

- Body: The requested resource or an error message.
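Going the other way, a client has to split a raw response back into these parts. The following sketch uses a hypothetical `parse_response` helper and assumes the complete response is already in memory and that the status line always includes a reason phrase; a real client reads from the socket incrementally and uses `Content-Length` (or the connection closing) to find the end of the body.

```python
def parse_response(raw: bytes):
    """Split a raw HTTP response into (version, status, reason, headers, body).

    parse_response is a hypothetical helper; it assumes the full response is
    in memory and that the status line includes a reason phrase.
    """
    # The header section ends at the first empty line (CRLF CRLF).
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    # Status line, e.g. "HTTP/1.0 200 OK" -> version, code, reason phrase.
    version, code, reason = status_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        # Header names are case-insensitive, so store them in lowercase.
        headers[name.strip().lower()] = value.strip()
    return version, int(code), reason, headers, body


raw = b"HTTP/1.0 404 Not Found\r\nContent-Type: text/plain\r\nContent-Length: 9\r\n\r\nNot found"
version, status, reason, headers, body = parse_response(raw)
print(status, reason, headers["content-type"], body)
```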

```http
HTTP/1.0 200 OK
Content-Type: text/html
Content-Length: 1024

<html>
<body>
<h1>Welcome to Example.com</h1>
</body>
</html>
```

Limitations of HTTP/1.0

- Connection Overhead:
  - Each request opens and closes a new TCP connection, which includes the cost of a three-way handshake and connection teardown.
  - This leads to increased latency and inefficiency, especially for pages with multiple resources.
- No Persistent Connections:
  - HTTP/1.0 has no standard way to reuse a single connection for multiple requests. Some clients and servers supported a non-standard Connection: keep-alive extension, but it was not part of the specification, so resource-heavy pages still paid for many separate connections.
- Limited Headers:
  - HTTP/1.0 uses a minimal set of headers. For example, the Host header was optional, which posed challenges for hosting multiple websites on a single server (virtual hosting).
- Limited Caching Mechanisms:
  - HTTP/1.0 offered only basic caching controls, such as Expires, Last-Modified with If-Modified-Since, and Pragma: no-cache, with no fine-grained directives for controlling how long, and by whom, a response may be cached.
- Lack of Support for Chunked Transfer Encoding:
  - Without it, a server could not stream dynamically generated content of unknown length over a reusable connection: it had to either buffer the entire response to compute Content-Length or mark the end of the body by closing the connection.
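A back-of-the-envelope model shows why the connection overhead adds up. The sketch below assumes, as a simplification, that a TCP handshake costs one round trip (RTT), each request-response exchange costs one more, and resources are fetched one after another; TLS, TCP slow start, and server processing time are ignored. The function name `page_load_rtts` is hypothetical.

```python
def page_load_rtts(num_resources: int, persistent: bool) -> int:
    """Round trips needed to fetch resources sequentially (simplified model).

    Assumptions: handshake = 1 RTT, each request/response = 1 RTT,
    no TLS, no slow start, no server think time.
    """
    if persistent:
        # One handshake up front, then one round trip per resource.
        return 1 + num_resources
    # HTTP/1.0 default: a fresh handshake before every request.
    return 2 * num_resources


for n in (1, 10, 50):
    print(n, page_load_rtts(n, persistent=False), page_load_rtts(n, persistent=True))
```

With a 50 ms RTT, ten sequential resources take roughly 20 x 50 = 1,000 ms when each request opens a new connection, versus 11 x 50 = 550 ms over a single reused connection.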

The inefficiencies of HTTP/1.0 became apparent as the web grew. The need for faster, more efficient communication increased with the advent of dynamic web pages and multimedia content. Hosting multiple websites on a single IP address also became common, which made the lack of a required Host header a real problem.

HTTP/1.1

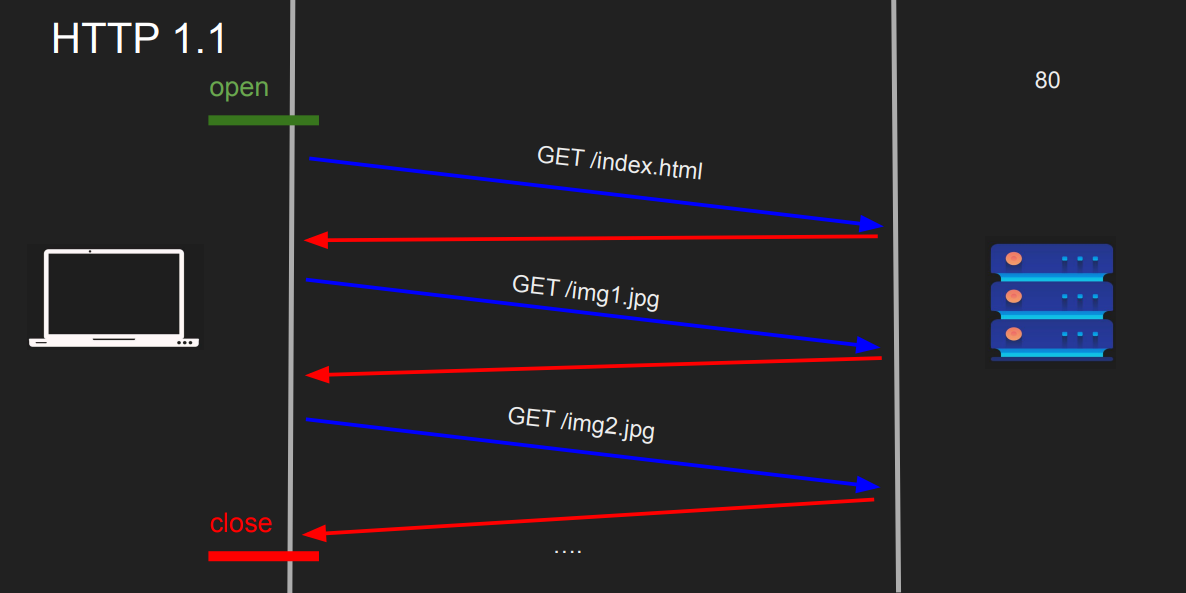

HTTP/1.1 is an improved version of HTTP/1.0 that was introduced to address many of its predecessor's limitations.

HTTP/1.1 is one of the most widely used protocols for communication between clients (e.g., browsers) and servers on the web.

HTTP/1.1 has been in use since 1997 and brought several key features that vastly improved the efficiency and flexibility of web communication.

Key Features and Improvements in HTTP/1.1

- Persistent Connections (Keep-Alive)

One of the most significant improvements in HTTP/1.1 is the introduction of persistent connections (also known as Keep-Alive), which allow the TCP connection to remain open for multiple requests and responses. This reduces the overhead of repeatedly opening and closing TCP connections, improving performance and reducing latency.

In HTTP/1.0, a new TCP connection is established for every request. In HTTP/1.1, the connection remains open by default, allowing multiple requests to be sent over the same connection. This is particularly useful for web pages with many resources (e.g., images, stylesheets, scripts), as it avoids the cost of opening a new connection for each resource.
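The persistence rules can be summarized as a small decision function. This is a simplified sketch modeled on RFC 9112's persistence rules for a client evaluating a response: a `close` token always ends the connection, HTTP/1.1 connections otherwise persist, and HTTP/1.0 connections persist only with the non-standard `keep-alive` token. The name `connection_stays_open` is hypothetical, and versions other than HTTP/1.0 and HTTP/1.1 are not handled.

```python
from typing import Optional


def connection_stays_open(version: str, connection_header: Optional[str]) -> bool:
    """Decide whether a connection persists after a response (simplified).

    connection_stays_open is a hypothetical helper; only HTTP/1.0 and
    HTTP/1.1 are considered.
    """
    # The Connection header is a comma-separated, case-insensitive token list.
    tokens = {t.strip().lower() for t in (connection_header or "").split(",") if t.strip()}
    if "close" in tokens:
        return False  # an explicit "close" always ends the connection
    if version == "HTTP/1.1":
        return True  # HTTP/1.1 connections are persistent by default
    # HTTP/1.0 persists only with the non-standard keep-alive extension.
    return version == "HTTP/1.0" and "keep-alive" in tokens


print(connection_stays_open("HTTP/1.1", None))     # True
print(connection_stays_open("HTTP/1.1", "close"))  # False
print(connection_stays_open("HTTP/1.0", None))     # False
```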

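Because persistent connections are the default in HTTP/1.1, no special header is required; either side can send Connection: close to signal that the connection will be closed after the current response. Clients may still send Connection: keep-alive explicitly, as in this request: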

```http
GET /image.jpg HTTP/1.1
Host: example.com
Connection: keep-alive
```

- Pipelining

HTTP/1.1 allows pipelining, where a client sends multiple requests over the same connection without waiting for each response. This removes the idle round trip between requests, and the server can start processing the next request while still sending the response for a previous one. However, the server must return responses in the same order it received the requests, so one slow response delays every response queued behind it (head-of-line blocking). Because of this, and because many servers and proxies handled pipelining incorrectly, browsers generally leave it disabled, and it is rarely used in practice.

Example: A browser can send multiple requests for different resources like images, JavaScript, and CSS in a single connection without waiting for each response.
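The cost of in-order responses can be modeled in a few lines. This sketch assumes the server prepares responses concurrently (each becomes ready at some time) but, as pipelining requires, sends them strictly in request order; transmission time is ignored. The helper name `pipelined_delivery_times` is hypothetical.

```python
def pipelined_delivery_times(ready_times: list) -> list:
    """Time at which each pipelined response reaches the client.

    ready_times[i] is when response i is ready on the server. Responses
    must be sent in request order; sending is treated as instantaneous.
    """
    delivered = []
    last = 0.0
    for ready in ready_times:
        # A response cannot go out before every earlier response has been sent.
        last = max(last, ready)
        delivered.append(last)
    return delivered


# The first request is slow (500 ms); the next two are ready after 10 ms.
print(pipelined_delivery_times([500, 10, 10]))  # [500, 500, 500]
```

A slow first response holds back the two fast ones, which is the head-of-line blocking problem: had the slow request been sent last, the fast responses would have arrived at 10 ms.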

- Chunked Transfer Encoding

HTTP/1.1 supports chunked transfer encoding, which lets the server send the body as a series of chunks without knowing the total content length upfront. This is especially helpful for dynamically generated content: the server can begin sending data as soon as it is ready instead of waiting until it knows the size of the entire response.

Each chunk is prefixed with its size in bytes, written in hexadecimal, and the response ends with a special zero-sized chunk.
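A minimal Python sketch of the chunk framing follows. `encode_chunked` and `decode_chunked` are hypothetical helpers; the decoder assumes well-formed input and ignores chunk extensions and trailer fields, which real parsers must handle.

```python
def encode_chunked(chunks: list) -> bytes:
    """Frame body pieces using HTTP/1.1 chunked transfer encoding."""
    out = bytearray()
    for chunk in chunks:
        if not chunk:
            continue  # a zero-length chunk would prematurely end the body
        # Each chunk: size in hexadecimal, CRLF, the data, CRLF.
        out += f"{len(chunk):X}\r\n".encode("ascii") + chunk + b"\r\n"
    # The last chunk has size 0, followed by an empty line (no trailers here).
    out += b"0\r\n\r\n"
    return bytes(out)


def decode_chunked(data: bytes) -> bytes:
    """Reassemble a chunked body (extensions and trailers are ignored)."""
    body = bytearray()
    pos = 0
    while True:
        line_end = data.index(b"\r\n", pos)
        # Drop any ";extension" after the size before parsing it as hex.
        size = int(data[pos:line_end].split(b";")[0], 16)
        pos = line_end + 2
        if size == 0:
            return bytes(body)
        body += data[pos:pos + size]
        pos += size + 2  # skip the chunk data and its trailing CRLF


print(encode_chunked([b"<html>", b"</html>"]))  # b'6\r\n<html>\r\n7\r\n</html>\r\n0\r\n\r\n'
```

The chunk sizes 6 and 7 are simply the byte lengths of `<html>` and `</html>`.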

```http
HTTP/1.1 200 OK
Transfer-Encoding: chunked
Content-Type: text/html

6
<html>
7
</html>
0

```

- Host Header

One of the most important changes in HTTP/1.1 was the introduction of the Host header, which became mandatory in HTTP/1.1 requests. The Host header specifies the domain name of the server being requested, allowing multiple websites to be hosted on a single server (virtual hosting).

- In HTTP/1.0, the Host header was optional, so a server could not reliably tell which site a request was meant for, and each website typically needed its own IP address.

- In HTTP/1.1, the Host header enables a server to serve different websites based on the domain name, even if they share the same IP address.

```http
GET /index.html HTTP/1.1
Host: example.com
```
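Virtual hosting boils down to choosing a site from the Host value. In this sketch the `SITES` mapping, its paths, and the `document_root` function are all hypothetical; port numbers are stripped and names are compared case-insensitively. In HTTP/1.1 a server must answer a request that lacks a Host header with 400 (Bad Request); here that case simply returns `None` so the caller can decide.

```python
from typing import Optional

# Hypothetical mapping from hosted domain names to document roots.
SITES = {
    "example.com": "/srv/example.com",
    "example.org": "/srv/example.org",
}


def document_root(host_header: Optional[str]) -> Optional[str]:
    """Return the document root for a Host header value, or None if none matches."""
    if not host_header:
        # HTTP/1.1 requires Host; the caller should answer 400 Bad Request.
        return None
    # Host may include a port ("example.com:8080") and is case-insensitive.
    # Simplification: IPv6 literals such as "[::1]:8080" are not handled.
    hostname = host_header.strip().lower().rsplit(":", 1)[0]
    return SITES.get(hostname)


print(document_root("example.com"))       # /srv/example.com
print(document_root("EXAMPLE.org:8080"))  # /srv/example.org
```

Two sites sharing one IP address are told apart purely by this header, which is why HTTP/1.1 made it mandatory.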

- Additional Cache Control Mechanisms

HTTP/1.1 introduced more sophisticated caching mechanisms. The Cache-Control header tells clients and proxies whether, and for how long, a response may be cached, which prevents unnecessary re-fetching from the server. This enables finer control over caching behavior, such as setting the expiration time (max-age) or defining private/public caching rules.

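- Cache-Control can specify:
  - no-store: The response must not be stored in any cache.
  - no-cache: The response may be stored, but must be revalidated with the server before each reuse.
  - max-age=<seconds>: Defines the maximum age for a cached resource.
  - public or private: Indicates whether a resource can be cached by a shared cache (such as a proxy) or only by a private cache (such as the browser).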

```http
HTTP/1.1 200 OK
Cache-Control: max-age=3600, public
```
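The directives can be interpreted with a small parser. This sketch uses hypothetical `parse_cache_control` and `is_fresh` helpers: a stored response counts as reusable only while its age is below `max-age`, and never without revalidation under `no-cache` or `no-store`. It deliberately ignores quoted commas, `s-maxage`, `Expires`, and heuristic freshness, all of which real caches consider.

```python
from typing import Optional


def parse_cache_control(value: str) -> dict:
    """Split a Cache-Control value into {directive: argument-or-None}."""
    directives: dict = {}
    for part in value.split(","):
        name, sep, arg = part.strip().partition("=")
        if name:
            # Directive names are case-insensitive; arguments may be quoted.
            directives[name.lower()] = arg.strip('"') if sep else None
    return directives


def is_fresh(cache_control: str, age_seconds: int) -> bool:
    """True if a stored response may be reused without contacting the server."""
    d = parse_cache_control(cache_control)
    if "no-store" in d or "no-cache" in d:
        return False  # no-store: never stored; no-cache: must revalidate first
    max_age: Optional[str] = d.get("max-age")
    # Fresh while the response's age is still below its max-age lifetime.
    return max_age is not None and age_seconds < int(max_age)


print(parse_cache_control("max-age=3600, public"))         # {'max-age': '3600', 'public': None}
print(is_fresh("max-age=3600, public", age_seconds=120))   # True
print(is_fresh("max-age=3600, public", age_seconds=4000))  # False
```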

- More Flexible and Comprehensive Headers

HTTP/1.1 added several new headers, enhancing flexibility and control over how requests and responses are handled:

- Range: Enables partial requests for large files, allowing the client to download only specific portions of a file (useful for resuming downloads or seeking through video files).

- Accept-Encoding: Specifies the types of encodings (such as gzip or deflate) the client supports, allowing the server to compress responses.

- Connection: Controls whether the connection should be kept alive or closed after the current transaction.

```http
GET /video.mp4 HTTP/1.1
Host: example.com
Range: bytes=0-1023
```
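On the server side, a `Range` header has to be resolved against the file size before replying with `206 Partial Content` and a `Content-Range` header, or with `416 Range Not Satisfiable` when the range falls outside the file. The sketch below handles a single byte range, including the open-ended (`bytes=500-`) and suffix (`bytes=-500`) forms; the function name `byte_range` and the 5,000,000-byte file size are hypothetical.

```python
from typing import Optional, Tuple


def byte_range(range_header: str, total: int) -> Optional[Tuple[int, int]]:
    """Resolve a single byte range to inclusive (first, last) offsets.

    byte_range is a hypothetical helper. Returns None when the range cannot
    be satisfied (a server would answer 416); multiple ranges are rejected.
    """
    unit, _, spec = range_header.partition("=")
    if unit.strip().lower() != "bytes" or "," in spec:
        raise ValueError("only a single byte range is supported in this sketch")
    first_s, _, last_s = spec.strip().partition("-")
    if first_s == "":
        # Suffix form "bytes=-N": the last N bytes of the resource.
        length = int(last_s)
        if length == 0 or total == 0:
            return None
        return max(total - length, 0), total - 1
    first = int(first_s)
    if first >= total:
        return None
    # Open-ended "bytes=N-" runs to the end; "last" is clamped to the file size.
    last = total - 1 if last_s == "" else min(int(last_s), total - 1)
    if last < first:
        raise ValueError("invalid range: last byte precedes first byte")
    return first, last


total = 5_000_000  # hypothetical size of /video.mp4 in bytes
first, last = byte_range("bytes=0-1023", total)
print(f"Content-Range: bytes {first}-{last}/{total}")  # Content-Range: bytes 0-1023/5000000
```

The offsets are inclusive, so `bytes=0-1023` covers exactly 1,024 bytes.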

- Error Handling and Status Codes

HTTP/1.1 introduced several new status codes and refined some existing ones, providing more granular error handling and better client-server communication. For example:

- 100 Continue: Informs the client that the server is ready to receive the request body.
- 206 Partial Content: Indicates a successful partial content (range) request.
- 413 Payload Too Large: Indicates that the request payload is too large for the server to process.
- 414 URI Too Long: The requested URI is too long to process.
- 418 I'm a teapot: Not actually part of HTTP/1.1; it is a humorous code from an April Fools' Day RFC (RFC 2324) and is not meant for serious use.

```http
HTTP/1.1 413 Payload Too Large
Content-Type: text/plain
```

- Content Negotiation

HTTP/1.1 supports content negotiation, allowing servers to serve different versions of a resource based on the client's capabilities or preferences (e.g., language, encoding, format).

- Accept: Specifies the preferred MIME types.

- Accept-Encoding: Specifies the supported compression algorithms (e.g., gzip, deflate).

- Accept-Language: Indicates the preferred language.

```http
GET /index.html HTTP/1.1
Host: example.com
Accept: text/html
Accept-Encoding: gzip
Accept-Language: en-US
```
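Servers typically pick a representation by ranking the `Accept` header's media ranges by their quality value (`q`, default 1). This is a simplified sketch covering only `Accept` (not `Accept-Encoding` or `Accept-Language`), with hypothetical helper names `parse_accept` and `choose_type`. It honors explicit `q=0` refusals but does not implement the full precedence rules by which more specific media ranges override wildcards.

```python
from typing import Optional


def parse_accept(header: str) -> list:
    """Parse an Accept header into (media_range, q) pairs, highest q first."""
    ranges = []
    for item in header.split(","):
        media_range, *params = [p.strip() for p in item.split(";")]
        q = 1.0  # default quality when no q parameter is given
        for param in params:
            key, _, value = param.partition("=")
            if key.strip().lower() == "q":
                q = float(value)
        if media_range:
            ranges.append((media_range.lower(), q))
    # sorted() is stable, so equal-q entries keep the client's order.
    return sorted(ranges, key=lambda pair: pair[1], reverse=True)


def choose_type(accept: str, available: list) -> Optional[str]:
    """Return the first available type matching the best-ranked media range."""
    ranked = parse_accept(accept)
    refused = {r for r, q in ranked if q == 0}  # q=0 means "not acceptable"
    for media_range, q in ranked:
        if q == 0:
            continue
        for candidate in available:
            if candidate in refused:
                continue
            main_type = candidate.split("/")[0]
            # Match exact type, "type/*", or the catch-all "*/*".
            if media_range in (candidate, f"{main_type}/*", "*/*"):
                return candidate
    return None  # a server might answer 406 Not Acceptable here


print(choose_type("application/json;q=0.5, text/html", ["application/json", "text/html"]))  # text/html
```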

Performance Considerations in HTTP/1.1

Despite the improvements over HTTP/1.0, HTTP/1.1 still has some performance bottlenecks:

- Head-of-Line Blocking: Even with persistent connections and pipelining, if a request in the pipeline is delayed, the entire sequence of requests is delayed.

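- Limited Concurrency: Each HTTP/1.1 connection handles only one request-response exchange at a time (pipelining aside), so browsers open several parallel connections per host, commonly up to six, to fetch resources concurrently. Every extra connection adds its own handshake and resource overhead. This issue led to the introduction of HTTP/2.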

Comparison: HTTP/1.0 vs HTTP/1.1

| Feature | HTTP/1.0 | HTTP/1.1 |
|---|---|---|
| Connection Handling | New connection per request | Persistent connections by default |
| Host Header | Optional | Mandatory |
| Caching Mechanisms | Basic | Advanced (Cache-Control, ETag) |
| Range Requests | Not supported | Supported |
| Chunked Encoding | Not supported | Supported |
| Pipelining | Not supported | Supported (rarely used) |

HTTP/2

HTTP/2 is a major upgrade over HTTP/1.1. It was designed to address the inefficiencies of its predecessor, especially around latency, head-of-line blocking, and bandwidth usage. HTTP/2 delivers a faster and more efficient web experience while keeping the same semantics as HTTP/1.1 (methods, status codes, and headers), so existing applications do not need to change.

Key Features of HTTP/2

- Binary Protocol

HTTP/2 uses a binary framing layer instead of the text-based format of HTTP/1.1. While HTTP/1.1 relies on readable headers and body data, HTTP/2 encodes these into a compact binary format for improved parsing and processing speed.
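The binary framing is easy to see in code. Every HTTP/2 frame starts with a fixed 9-byte header: a 24-bit payload length, an 8-bit type, an 8-bit flags field, and a reserved bit followed by a 31-bit stream identifier (RFC 9113, section 4.1). This sketch packs and unpacks that header; the function names are hypothetical, and payload handling is left out.

```python
def encode_frame_header(length: int, frame_type: int, flags: int, stream_id: int) -> bytes:
    """Pack the fixed 9-byte HTTP/2 frame header."""
    if not 0 <= length < 2**24 or not 0 <= stream_id < 2**31:
        raise ValueError("length must fit in 24 bits and stream_id in 31 bits")
    return (
        length.to_bytes(3, "big")        # payload length (24 bits)
        + bytes([frame_type, flags])     # type and flags (8 bits each)
        + stream_id.to_bytes(4, "big")   # reserved bit (0) + stream ID (31 bits)
    )


def decode_frame_header(header: bytes) -> tuple:
    """Unpack a 9-byte frame header into (length, type, flags, stream_id)."""
    length = int.from_bytes(header[0:3], "big")
    frame_type, flags = header[3], header[4]
    # Mask off the reserved high bit to recover the 31-bit stream identifier.
    stream_id = int.from_bytes(header[5:9], "big") & 0x7FFFFFFF
    return length, frame_type, flags, stream_id


DATA, HEADERS = 0x0, 0x1  # frame type codes
END_STREAM = 0x1          # flag marking the last frame of a stream

hdr = encode_frame_header(5, DATA, END_STREAM, 1)
print(hdr.hex())  # 000005000100000001
```

Because lengths and IDs sit at fixed offsets, a parser reads exactly nine bytes and knows how large the payload is, with no scanning for line endings as in HTTP/1.1.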

- Multiplexing

Multiplexing in HTTP/2 allows multiple HTTP requests and responses to be sent concurrently over a single TCP connection. Unlike HTTP/1.1, where only one request/response can be processed at a time per connection (or limited pipelining with head-of-line blocking), HTTP/2 enables multiple streams of data to flow independently.

- HTTP/2 communication is divided into streams, which are independent logical channels within the same physical TCP connection.

- Each stream is identified by a unique stream ID, ensuring no confusion between multiple simultaneous requests and responses.
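Because every frame carries its stream ID, the receiver can reassemble interleaved frames into separate streams. In this sketch the frame list is hypothetical data, frames are reduced to `(stream_id, payload)` pairs, and `demultiplex` is a made-up helper name. The IDs 1, 3, and 5 are odd because client-initiated streams use odd numbers.

```python
from collections import defaultdict


def demultiplex(frames: list) -> dict:
    """Reassemble each stream's data from frames that arrive interleaved."""
    streams = defaultdict(bytearray)
    for stream_id, payload in frames:
        # Frames within one stream stay in order, but frames from different
        # streams can be interleaved freely on the shared connection.
        streams[stream_id] += payload
    return {sid: bytes(data) for sid, data in streams.items()}


# Hypothetical interleaved DATA payloads on one connection: (stream_id, payload).
frames = [
    (1, b"<html>"), (3, b"body{"), (1, b"<body>"),
    (5, b"\x89PNG"), (3, b"}"), (1, b"</body></html>"),
]
for stream_id, data in demultiplex(frames).items():
    print(stream_id, data)
```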

- Header Compression (HPACK)

HTTP/2 introduces the HPACK algorithm for compressing headers. HTTP/1.1 resends the same headers (e.g., User-Agent, Cookie) with every request, which creates significant overhead, especially for small payloads.

HPACK encodes headers in a compact binary format, and client and server keep synchronized header tables so that a header sent once can later be referenced by a small index instead of being repeated in full.
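The idea behind the shared table can be illustrated with a toy model. This is not HPACK's actual encoding: real HPACK also has a predefined static table, a size-limited dynamic table with eviction, Huffman-coded literals, and a compact binary integer format. The hypothetical `ToyHeaderTable` class below only shows how a header sent once can be replaced by a small index afterward.

```python
class ToyHeaderTable:
    """Toy model of HPACK-style indexing (NOT the real HPACK wire format).

    Both endpoints keep identical tables. Simplifications: no static table,
    no size limit or eviction, and entries are appended rather than
    inserted at the front as in HPACK.
    """

    def __init__(self) -> None:
        self.entries: list = []

    def encode(self, headers: list) -> list:
        out = []
        for pair in headers:
            if pair in self.entries:
                out.append(self.entries.index(pair))  # indexed: a small integer
            else:
                out.append(pair)                      # literal: sent in full...
                self.entries.append(pair)             # ...and remembered
        return out

    def decode(self, encoded: list) -> list:
        headers = []
        for item in encoded:
            if isinstance(item, int):
                headers.append(self.entries[item])    # look up a known header
            else:
                headers.append(item)
                self.entries.append(item)             # mirror the encoder's table
        return headers


client, server = ToyHeaderTable(), ToyHeaderTable()
first = client.encode([("user-agent", "Mozilla/5.0"), ("cookie", "session=abc123"), (":path", "/index.html")])
server.decode(first)
second = client.encode([("user-agent", "Mozilla/5.0"), ("cookie", "session=abc123"), (":path", "/style.css")])
print(second)  # [0, 1, (':path', '/style.css')]
```

On the second request the unchanged `user-agent` and `cookie` headers shrink to the indices 0 and 1, and only the new path is sent in full.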

- Stream Prioritization

HTTP/2 allows clients to prioritize streams by assigning dependencies and weights. Each stream is assigned a weight between 1 and 256, and dependencies define a parent-child relationship between streams. For example, a browser can prioritize critical resources like HTML and rendering-critical CSS over less critical resources like JavaScript files or background images.
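Weights determine how sibling streams (streams with the same parent) share the available capacity: each gets a share proportional to its weight. The sketch below ignores the dependency tree and simply splits bandwidth among siblings; the function name `share_bandwidth` and the example numbers are hypothetical. RFC 9113 later deprecated this prioritization scheme, so treat the example as an illustration of the original HTTP/2 design.

```python
def share_bandwidth(total_kbps: float, weights: dict) -> dict:
    """Split capacity among sibling streams in proportion to their weights."""
    if any(not 1 <= w <= 256 for w in weights.values()):
        raise ValueError("HTTP/2 stream weights range from 1 to 256")
    total_weight = sum(weights.values())
    # Each stream receives weight / (sum of sibling weights) of the capacity.
    return {sid: total_kbps * w / total_weight for sid, w in weights.items()}


# Hypothetical: stream 1 (CSS) has weight 192, stream 3 (background image) 64.
print(share_bandwidth(1000, {1: 192, 3: 64}))  # {1: 750.0, 3: 250.0}
```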

- Server Push

HTTP/2 introduces server push, which allows the server to proactively send resources to the client before they are requested. When a client requests an HTML page, the server can "push" associated CSS and JavaScript files along with the response, saving the round trips the client would otherwise spend requesting them. However, server push adoption has been limited, and it is being phased out in favor of other optimization techniques, such as the 103 Early Hints status code.

- Stream Independence

Streams in HTTP/2 are independent at the HTTP layer, meaning that if one stream faces a delay or error, it doesn’t block the others. HTTP/1.1 suffers from head-of-line blocking due to strict request-response ordering, whereas HTTP/2 allows requests and responses to be processed asynchronously.

Limitations of HTTP/2

- Head-of-Line Blocking at the Transport Layer:
  - Although HTTP/2 resolves application-layer head-of-line blocking, it still inherits TCP's head-of-line blocking. TCP delivers bytes strictly in order, so if one packet is lost, data for every stream on the connection waits until that packet is retransmitted.
- Complexity:
  - HTTP/2 is more complex than HTTP/1.1, which makes it harder to implement and debug.
- Server Push Limitations:
  - Overuse of server push can waste bandwidth when the client already has the resource cached.
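Transport-layer head-of-line blocking can be simulated with TCP's in-order delivery rule. In this sketch each segment is labeled with the HTTP/2 stream it belongs to and with its position in the byte stream; the helper name `tcp_delivery` and the arrival times are hypothetical, and retransmission is reduced to a single late arrival.

```python
def tcp_delivery(arrivals: list) -> list:
    """Model TCP's in-order delivery to the application.

    arrivals: (arrival_time, sequence_number, stream_label) per segment, where
    sequence numbers 0, 1, 2, ... give the order the sender wrote them.
    Returns (delivery_time, stream_label) in the order the application sees them.
    """
    buffered = {}
    next_seq = 0
    delivered = []
    for time, seq, label in sorted(arrivals):
        buffered[seq] = label
        # Release segments only while there is no gap in the sequence.
        while next_seq in buffered:
            delivered.append((time, buffered.pop(next_seq)))
            next_seq += 1
    return delivered


# Segment 1 (stream A) is lost and its retransmission arrives at t=100.
arrivals = [(1, 0, "A"), (3, 2, "B"), (4, 3, "B"), (100, 1, "A")]
print(tcp_delivery(arrivals))  # [(1, 'A'), (100, 'A'), (100, 'B'), (100, 'B')]
```

Stream B's segments arrive at t=3 and t=4, yet the application cannot read them until t=100, even though stream B has nothing to do with the lost stream A segment.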

Comparison: HTTP/1.1 vs HTTP/2

| Feature | HTTP/1.1 | HTTP/2 |
|---|---|---|
| Protocol Type | Text-based | Binary |
| Connection Management | One request at a time per connection (or pipelining) | Multiple concurrent requests per connection (multiplexing) |
| Header Handling | Repeated headers | Compressed headers (HPACK) |
| Stream Handling | Sequential (head-of-line blocking) | Independent streams |
| Resource Prioritization | None | Stream prioritization |
| Server Push | Not supported | Supported |