Request-Response Cycle in Backend Communication

What is the Request-Response Model?

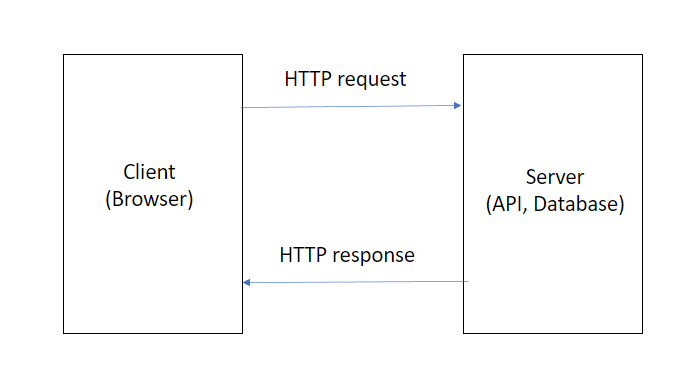

The request-response model is a fundamental communication pattern in backend systems, forming the backbone of various protocols and services across the web. In its simplest form, the request-response model involves a client sending a request to a server. The server processes the request and sends back a response. This interaction may seem straightforward, but beneath the surface lies a complex process of parsing, serialization, and network communication.

Anatomy of a Request

When a client sends a request, several critical steps take place:

- Defining the Request: The client structures the request in a format the server understands, typically as a stream of bytes sent over a transport protocol such as TCP.
- Parsing the Request: The server identifies where each request begins and ends, which matters most when multiple requests arrive in a single stream. Parsing can be computationally expensive and has a direct effect on backend performance.
- Processing the Request: After parsing, the server executes the request, which may involve fetching data, running computations, or querying a database.
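As a rough illustration of the parsing step, the sketch below assumes a hypothetical framing in which every request is a 4-byte big-endian length followed by a JSON body. That framing and the names `encode_request` and `split_requests` are assumptions made for this example; real protocols such as HTTP mark message boundaries differently.

```python
import json
import struct

HEADER = struct.Struct(">I")  # hypothetical framing: 4-byte big-endian body length


def encode_request(payload: dict) -> bytes:
    """Client side: serialize the payload and prefix it with its length."""
    body = json.dumps(payload).encode("utf-8")
    return HEADER.pack(len(body)) + body


def split_requests(buffer: bytes) -> tuple[list[dict], bytes]:
    """Server side: pull every complete request out of a byte stream.

    Returns the parsed requests plus any leftover bytes that belong to a
    request that has not fully arrived yet.
    """
    requests = []
    offset = 0
    while len(buffer) - offset >= HEADER.size:
        (length,) = HEADER.unpack_from(buffer, offset)
        start = offset + HEADER.size
        end = start + length
        if end > len(buffer):
            break  # body is incomplete; wait for more data
        requests.append(json.loads(buffer[start:end].decode("utf-8")))
        offset = end
    return requests, buffer[offset:]


# Two requests arrive back to back, followed by a fragment of a third one.
stream = (
    encode_request({"action": "get_user", "id": 1})
    + encode_request({"action": "list_orders", "id": 1})
    + encode_request({"action": "ping"})[:3]
)
parsed, leftover = split_requests(stream)
```

Here `parsed` holds the two complete requests, while `leftover` keeps the partial bytes so they can be joined with whatever arrives next on the connection.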

Anatomy of a Response

The response follows a similar pattern:

- Constructing the Response: Once the request is processed, the server prepares a response. This involves serialization: converting the processed data into a format like JSON or XML.
- Sending the Response: The response travels back to the client over the network, where the client parses and deserializes it into a usable form.
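The two response steps can be sketched with Python's standard `json` module. The envelope shape (`status` and `data` keys) and the function names are hypothetical, chosen only to make the round trip visible.

```python
import json


def build_response(status: int, data: dict) -> bytes:
    """Server side: serialize a processed result into JSON bytes."""
    envelope = {"status": status, "data": data}  # hypothetical response shape
    return json.dumps(envelope).encode("utf-8")


def read_response(raw: bytes) -> dict:
    """Client side: decode the bytes and deserialize them back into a dict."""
    return json.loads(raw.decode("utf-8"))


wire_bytes = build_response(200, {"user": "ada", "orders": [17, 42]})
response = read_response(wire_bytes)
```

Only `wire_bytes` crosses the network; the dictionaries on either side exist solely in each process's memory.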

Time Taken in Building Requests and Responses

The time required to build a request and construct a response can vary based on several factors, including data complexity, serialization methods, and network conditions.

- Building the Request:
  - Serialization Time: Converting data structures into a transmittable format (e.g., JSON, XML) introduces overhead. The efficiency of this process depends on the serialization method and data complexity.
  - Network Latency: The time it takes for a request to travel from the client to the server is influenced by network speed and distance. High latency can significantly impact overall request time.
- Constructing the Response:
  - Processing Time: Once the server receives a request, it processes it, which may involve database queries, computations, or other operations. The duration of this processing directly affects response time.
  - Serialization Time: After processing, the server constructs a response by serializing the result. The efficiency of this serialization affects how quickly the response can be sent back to the client.
- Typical Timeframes:
  - Fast Responses: For simple requests, such as fetching a small amount of data, the entire request-response cycle can be completed in a few milliseconds. Some applications target response times of around 20 milliseconds, with more complex pages taking up to 100 milliseconds.
  - Complex Operations: More complex operations, such as processing large datasets or performing intensive computations, can extend response times to several seconds. In some cases, API calls may take up to 20-30 seconds to complete, depending on the complexity of the operation and the efficiency of the backend system.
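One way to see where the time goes is to measure each phase separately. The sketch below is a minimal, single-process approximation: `process` is a hypothetical stand-in for real server work, and network latency is not measured at all because nothing leaves the machine.

```python
import json
import time


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, (time.perf_counter() - start) * 1000.0


def process(request: dict) -> dict:
    """Stand-in for server-side work such as a database query."""
    return {"items": [{"id": i, "name": f"item-{i}"} for i in range(request["count"])]}


request = {"count": 10_000}

raw_request, serialize_ms = timed(json.dumps, request)
result, process_ms = timed(process, json.loads(raw_request))
raw_response, respond_ms = timed(json.dumps, result)

timings = {
    "serialize request": serialize_ms,
    "process": process_ms,
    "serialize response": respond_ms,
}
for phase, ms in timings.items():
    print(f"{phase:<20} {ms:8.3f} ms")
```

The absolute numbers depend on the machine and the payload, so they are best read as relative proportions between the phases.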

Optimizations

- Efficient Serialization: Using efficient serialization formats (e.g., Protocol Buffers) can reduce the time taken to build requests and responses.
- Asynchronous Processing: Implementing asynchronous processing can help manage longer-running tasks without blocking the main thread, improving overall responsiveness.
- Caching: Leveraging caching mechanisms can reduce processing time for frequently requested data, thereby improving response times.
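The caching point can be illustrated with `functools.lru_cache` from the standard library. `fetch_profile` is a hypothetical lookup, and the `time.sleep` call is only an assumption standing in for a slow database query.

```python
import time
from functools import lru_cache

calls = {"count": 0}  # counts how often the slow path actually runs


@lru_cache(maxsize=128)
def fetch_profile(user_id: int) -> tuple:
    """Hypothetical slow lookup; the sleep stands in for a database query."""
    calls["count"] += 1
    time.sleep(0.05)
    return (user_id, f"user-{user_id}")


first = fetch_profile(7)   # cache miss: runs the slow lookup
second = fetch_profile(7)  # cache hit: returned from memory
info = fetch_profile.cache_info()
```

An in-process cache like this never expires entries on its own, so data that changes over time would still need some invalidation strategy.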

Real-World Applications of the Request-Response Model

The request-response pattern powers many protocols and systems:

- HTTP: The foundation of the web, HTTP is a classic request-response protocol. Clients send GET, POST, or other requests to servers, which respond with the required resources.
- DNS: When resolving domain names, DNS servers use a request-response mechanism to provide IP addresses.
- APIs: REST, GraphQL, and SOAP APIs operate on this model, allowing clients to query data or perform actions on servers.
- SQL Queries: Databases follow a similar protocol, where clients send SQL queries and receive results as responses.
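To make the HTTP case concrete, here is a minimal sketch that runs a server and a client in one process using only the standard library. `EchoHandler` and the `/status` path are hypothetical names invented for the example.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class EchoHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: answers every GET with a small JSON document."""

    def do_GET(self):
        body = json.dumps({"path": self.path, "message": "hello"}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


# Port 0 asks the OS for any free port on the loopback interface.
server = HTTPServer(("127.0.0.1", 0), EchoHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

# Client side: send one GET request and read the single response.
conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
conn.request("GET", "/status")
reply = conn.getresponse()
status = reply.status
payload = json.loads(reply.read().decode("utf-8"))
conn.close()

server.shutdown()
server.server_close()
```

The client sends exactly one request and receives exactly one response, which is the whole cycle in miniature.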

Optimizing the Request-Response Model

While the model is efficient, there are areas for optimization:

- Serialization: Binary formats like Protocol Buffers are typically faster to encode and decode, and more compact on the wire, than text formats like JSON or XML.
- Chunking: For large files, dividing data into smaller chunks can improve reliability and reduce resource consumption.
- Reducing Latency: Minimizing the time spent on network transfers, parsing, and processing can significantly enhance performance.
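Chunking can be sketched as a generator that reads a stream piece by piece instead of loading it whole. The name `iter_chunks` and the 64 KiB default are assumptions for illustration, and an in-memory buffer stands in for a large file.

```python
import io
from typing import BinaryIO, Iterator


def iter_chunks(stream: BinaryIO, chunk_size: int = 64 * 1024) -> Iterator[bytes]:
    """Yield the stream's contents in pieces of at most chunk_size bytes."""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        yield chunk


# An in-memory stream stands in for a large file or upload body.
source = io.BytesIO(b"x" * 150_000)
chunks = list(iter_chunks(source))
sizes = [len(c) for c in chunks]
```

Collecting the chunks into a list is done here only so they can be inspected; in practice each chunk would be sent as soon as it is read, so only one chunk is held in memory at a time.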

Limitations and Alternatives

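The request-response model isn't ideal for every scenario. Because the server only speaks when asked, real-time applications like chat or notifications must poll for updates, and frequent polling that mostly returns nothing can strain networks and servers. Alternative patterns like WebSockets or server-sent events (SSE), which let the server push data as it becomes available, provide better solutions for such cases.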
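The cost of polling can be shown with a small simulation. `Mailbox` is a hypothetical in-memory stand-in for a server, and the tick schedule is invented for the example.

```python
from collections import deque


class Mailbox:
    """Hypothetical in-memory server that only answers when asked."""

    def __init__(self):
        self._pending = deque()

    def publish(self, message: str) -> None:
        self._pending.append(message)

    def poll(self) -> list[str]:
        """One request-response round trip: return and clear pending messages."""
        messages = list(self._pending)
        self._pending.clear()
        return messages


mailbox = Mailbox()
received, empty_polls = [], 0

# The client polls on ten ticks; a message only arrives on ticks 3 and 8.
for tick in range(10):
    if tick in (3, 8):
        mailbox.publish(f"message at tick {tick}")
    reply = mailbox.poll()
    if reply:
        received.extend(reply)
    else:
        empty_polls += 1
```

In this run, eight of the ten round trips carry no data, which is the overhead that push-based patterns aim to avoid.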

Conclusion

The request-response model is a fundamental building block of backend systems, offering a robust framework for client-server communication. Understanding its intricacies, from parsing and serialization to optimization techniques, helps engineers build efficient and scalable applications.